CUDASynth

All coding for standalone and integrated DGTweak() is done. Just have to test thoroughly and update the manual. Then I'll give y'all a test version.

CUDASynth

Here is test8:

* Added standalone and integrated DGTweak(). Refer to the DGDecodeNV manual for details.

* Updated the DGDecodeNV manual.

http://rationalqm.us/misc/DGDecodeNV_test8.zip

Your testing will be appreciated.

CUDASynth

So guys, we're gonna need some form of auto levels and some form of auto color (white balance). Let's start with levels. Since the AutoLevels() plugin appears to be abandoned (maybe because the author hadn't the skills or motivation to implement HBD and multiple instantiation), what do you think of making a functionally equivalent DG filter that adds HBD, multiple instantiation, some fixes and enhancements, and a windowed mode like my Windowed Histogram Equalization filter?

Or maybe there are more pressing needs for integrated filters?

CUDASynth

Looks like a winner to me

For interest, how would you characterize those filters vs a HDRAGC ?

I really do like it here.

CUDASynth

Never looked at HDRAGC. I'll check it out.

CUDASynth

Some initial thoughts, first regarding levels, not color balance. Obviously, judging by so many posts and threads about this everywhere, levels correction is an important and sought-after function. Some factors/features with examples:

* contrast stretching (Avisynth Levels())

* contrast stretching with dithering (Avisynth Levels())

* automatic contrast stretching per frame (ColorYUV(autogain=true))

* automatic contrast stretching per frame modified by running average with scene-change detection (AutoLevels())

* automatic contrast stretching per luminance range, i.e., 'perceptual awareness' aka 'tone mapping' (HDRAGC())

* histogram equalization (DG histogram equalizer)

* histogram equalization with debanding (DG histogram equalizer with dithering)

* histogram equalization per local spatial areas within a frame (DG windowed histogram equalizer)

Desktop work on these implementations began circa 2003 but all appear to have been abandoned for various reasons, leaving users stuck with tools working poorly for modern video, and thirsting for updates that won't ever come. The most recent significant development I've seen on any of them ended circa 2019. As far as I can tell, they typically lack HBD support and run pitifully slowly for modern frame sizes.

So it is time to bring levels correction up-to-date for modern video standards and using modern technology, aka CUDASynth! And I'll humbly say that I am the ideal squirrel to do it.

So, with your help we are going to implement modern levels correction fulfilling the factors/features listed above, with the addition of HBD, 64-bit support, and super fast performance. Dream big, aim high!

We can be guided by existing implementations but we shouldn't be handcuffed by them. You, dear members, can help initially by telling us about problems with those implementations that have resisted solution. Source video samples will be particularly valuable. That will really help us in the design phase.
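For reference, the plain (non-windowed) histogram equalization item in the list above can be sketched as follows. This is only the textbook CDF-based mapping in Python, not the code of any DG filter; the function name `equalization_lut` is made up for illustration.

```python
def equalization_lut(histogram):
    """Build a lookup table that equalizes one plane, given its histogram.

    Textbook mapping: out = round((cdf(v) - cdf_min) / (N - cdf_min) * (L - 1)),
    where N is the pixel count and L is the number of levels.
    """
    total = sum(histogram)
    levels = len(histogram)
    # Count in the first occupied bin; it maps to output level 0.
    cdf_min = next((count for count in histogram if count > 0), 0)
    if total == cdf_min:
        # Empty or single-level image: nothing to equalize, return identity.
        return list(range(levels))

    lut = []
    cdf = 0
    for count in histogram:
        cdf += count
        scaled = max(cdf - cdf_min, 0) / (total - cdf_min)
        lut.append(int(scaled * (levels - 1) + 0.5))
    return lut


# Three occupied levels get spread over the full 8-bit range.
hist = [0] * 256
hist[100], hist[110], hist[120] = 10, 10, 20
lut = equalization_lut(hist)
print(lut[100], lut[110], lut[120])
```

A windowed variant would compute one such table per spatial window and blend between neighboring tables, which is where most of the extra cost comes from.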

CUDASynth

Referring to AutoLevels(), some issues to be aware of from johnmeyer at doom9:

1. Scenes that are naturally supposed to be dark, like sunsets, get gained up too much.

2. Bright objects, like someone walking directly in front of the video light on a camera-mounted light, cause the main video to go pitch black.

3. The autolevels algorithm attempts to always stretch the histogram to the limits, so at least one pixel is 255 and one pixel is 0. This often results in unnatural, high contrast.

4. The midtones are not stretched enough.

The last one is the toughest. Under- or over-exposed photos and video almost always compress the midtones (a necessary by-product of not using the whole exposure range). Simply moving those pixels up or down doesn't really solve the problem. Instead, the normal "hump" that represents the center range of exposure not only needs to be moved up or down, but also stretched out. I do this with custom gamma curves when I do my own corrections, but it is tricky stuff because you end up solarizing the image if you get the slope of the curves too steep.

And frustum:

Another failure mode of autolevels() which I had never thought about is the problem of fades and blended transitions. I was unaware of this because all of my work has been on 8mm home movies, and every cut is a jump cut. I think it would be relatively easy to look for a smooth transition to/from near black and to preserve it rather than attempting to boost those dark frames. Having that larger "scene" window would make it easy to see such transitions coming.

CUDASynth

Just brainstorming ...

Regarding AutoLevels(), it uses histograms only to determine the input_low and input_high values to be used for linear contrast stretching. In fact, it borrows the Avisynth Levels() code to make the actual transformation. There is no histogram equalization or other method (such as nonlinear stretching) to adapt to the image content. I believe our solution should offer some method(s) for adaptive mapping. The user should be able to specify the mode of operation. Perhaps one mode might be nonlinear stretching with a user-specified curve. Another might be full windowed histogram equalization and modifications thereof, e.g., lerp'ing the spatial windows between full equalization and the original image based on user-specified spatial areas, or luma ranges. A challenge will be to provide a UI that allows the user to specify appropriate curves, areas, and ranges.

Let's get something basic running first with image-adaptive contrast stretching and enhance it later for histogram equalization if it offers anything substantial over adaptive contrast stretching (it may not). I think histogram equalization may be useful for some specialized applications, such as medical and satellite terrain images, but in general produces unnatural results that are not useful for typical desktop video. We'll see.
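To make the "histogram only picks input_low and input_high" point concrete, here is a minimal Python sketch of that kind of automatic linear stretch. It is not AutoLevels() source code. The function names and the `ignore_low`/`ignore_high` fractions are assumptions for illustration; skipping a small fraction of outlier pixels is one common way to avoid stretching all the way to a single stray 0 or 255 pixel.

```python
def auto_levels_limits(histogram, ignore_low=0.001, ignore_high=0.001):
    """Pick (input_low, input_high) from a histogram, skipping outlier pixels.

    ignore_low / ignore_high are hypothetical parameters: the fraction of
    pixels at each end that may be clipped.
    """
    total = sum(histogram)
    low_budget = total * ignore_low
    high_budget = total * ignore_high

    # Walk up from black until more pixels than the budget have been passed.
    input_low = 0
    passed = 0
    for level, count in enumerate(histogram):
        passed += count
        if passed > low_budget:
            input_low = level
            break

    # Walk down from white the same way.
    input_high = len(histogram) - 1
    passed = 0
    for level in range(len(histogram) - 1, -1, -1):
        passed += histogram[level]
        if passed > high_budget:
            input_high = level
            break

    return input_low, input_high


def stretch(value, input_low, input_high, max_value=255):
    """Linear contrast stretch of one sample, clamped to [0, max_value]."""
    if input_high <= input_low:
        return value  # degenerate range, leave the sample alone
    scaled = (value - input_low) / (input_high - input_low)
    scaled = min(max(scaled, 0.0), 1.0)
    return int(scaled * max_value + 0.5)


# Content between 32 and 200, plus one stray black and one stray white pixel.
hist = [0] * 256
for level in range(32, 201):
    hist[level] = 100
hist[0] = hist[255] = 1
print(auto_levels_limits(hist, 0.0, 0.0))  # no outlier rejection
print(auto_levels_limits(hist))            # default outlier rejection
```

With no outlier rejection the two stray pixels pin the limits to the full range and nothing gets stretched. An adaptive mode would replace the straight line in `stretch` with a curve.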

CUDASynth

Need to look at Retinex also.

https://github.com/Asd-g/AviSynth-Retinex

Here's what I'm talking about regarding nonlinear curves:

https://epicedits.com/2010/02/12/nonlin ... istograms/

We could have a series of selectable standard curves scaled between selectable input_high and input_low. Also, we could allow the user to specify a custom curve (several ways to do that). Of course that is all manual. Then layered on top of that we can implement automaticity. For example, full automaticity where the filter chooses input_high/input_low and the curve. Or partial automaticity where the filter chooses input_high/input_low but the user chooses the curve.

Now what I just described covers contrast, i.e., multiplying input values by some factor. We may also be interested in brightness adjustments, i.e., adding/subtracting to/from input values. We could apply a similar approach with nonlinear brightness curves. A simple equation could combine the two adjustments:

out = contrast * in + brightness

This approach is the general direction I'm heading for. Let me know what you think of all this, guys.
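As a quick sanity check of the out = contrast * in + brightness form, here is a small Python sketch showing that a plain linear stretch between input_low and input_high is one special case of it. The helper names are hypothetical, and 8-bit values are assumed.

```python
def apply_contrast_brightness(value, contrast, brightness, max_value=255):
    """out = contrast * in + brightness, clamped and rounded to an integer."""
    out = contrast * value + brightness
    return int(min(max(out, 0), max_value) + 0.5)


def levels_as_contrast_brightness(input_low, input_high, max_value=255):
    """Express a linear stretch of [input_low, input_high] as (contrast, brightness)."""
    contrast = max_value / (input_high - input_low)
    brightness = -input_low * contrast
    return contrast, brightness


contrast, brightness = levels_as_contrast_brightness(32, 200)
for sample in (0, 32, 200, 255):
    print(sample, apply_contrast_brightness(sample, contrast, brightness))
```

In the nonlinear case described above, contrast and brightness would each become a curve evaluated at the input value rather than a single constant.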

CUDASynth

I have decided on a plan. Everything to be done in CUDASynth of course. That gets us speed, HBD, and Vapoursynth support.

1. Implement a basic DGLevels() filter based on Avisynth+ Levels().

2. Enhance to generalize the gamma control into generalized curves (gamma is just a special curve).

3. Enhance to include brightness curves.

4. Implement automaticity.

5. Possibly implement spatial area functionality (windowed contrast adjustment).

6. Possibly implement histogram equalization/windowed histogram equalization.

7. Move onto the color domain: white balance etc.

I've had a pretty good look at AutoLevels() and while there are things to like about it there are also things not to like, so I won't be drawing much guidance from it. I am going to implement my own ideas on automaticity keeping in mind the problem areas mentioned in earlier posts.

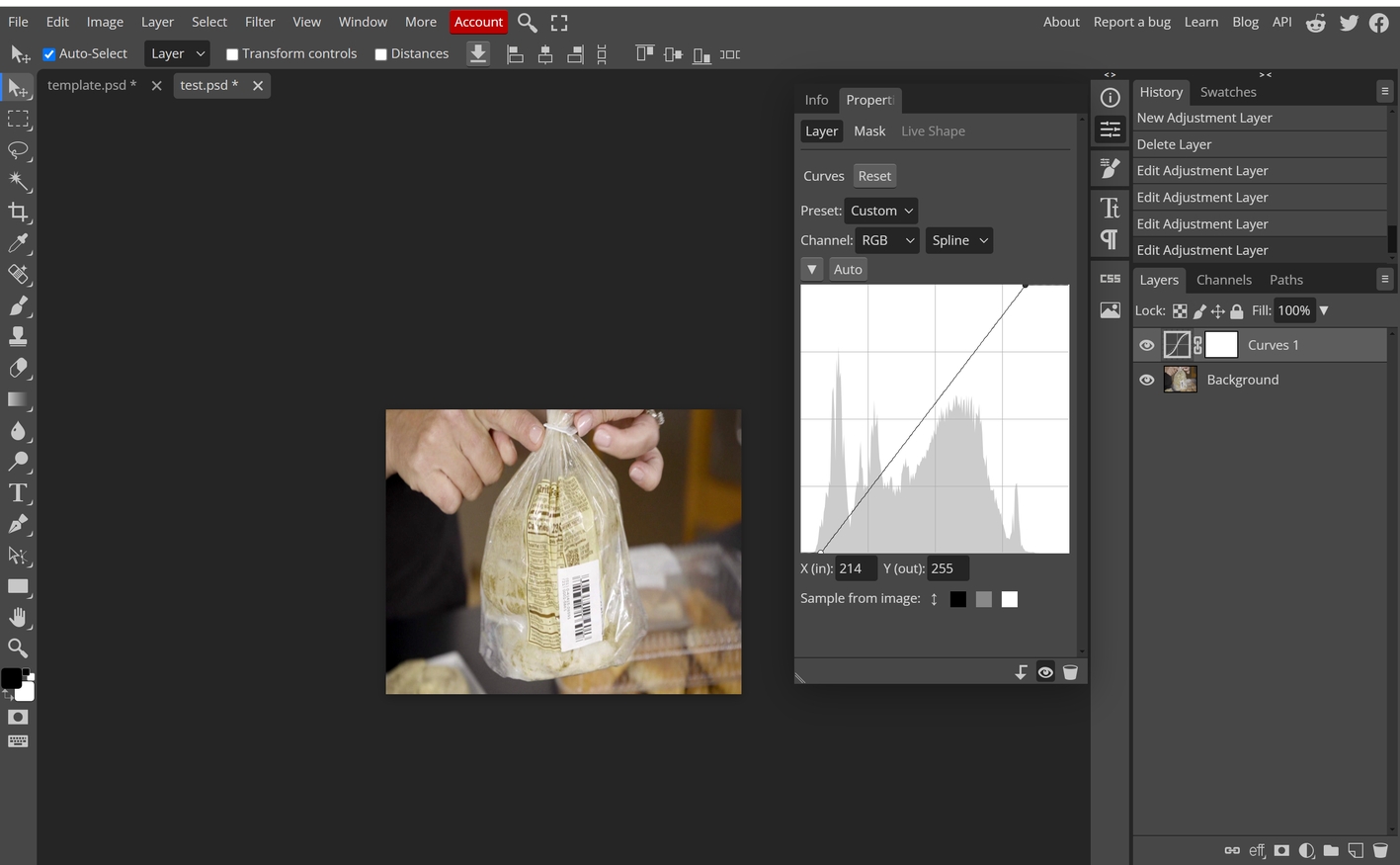

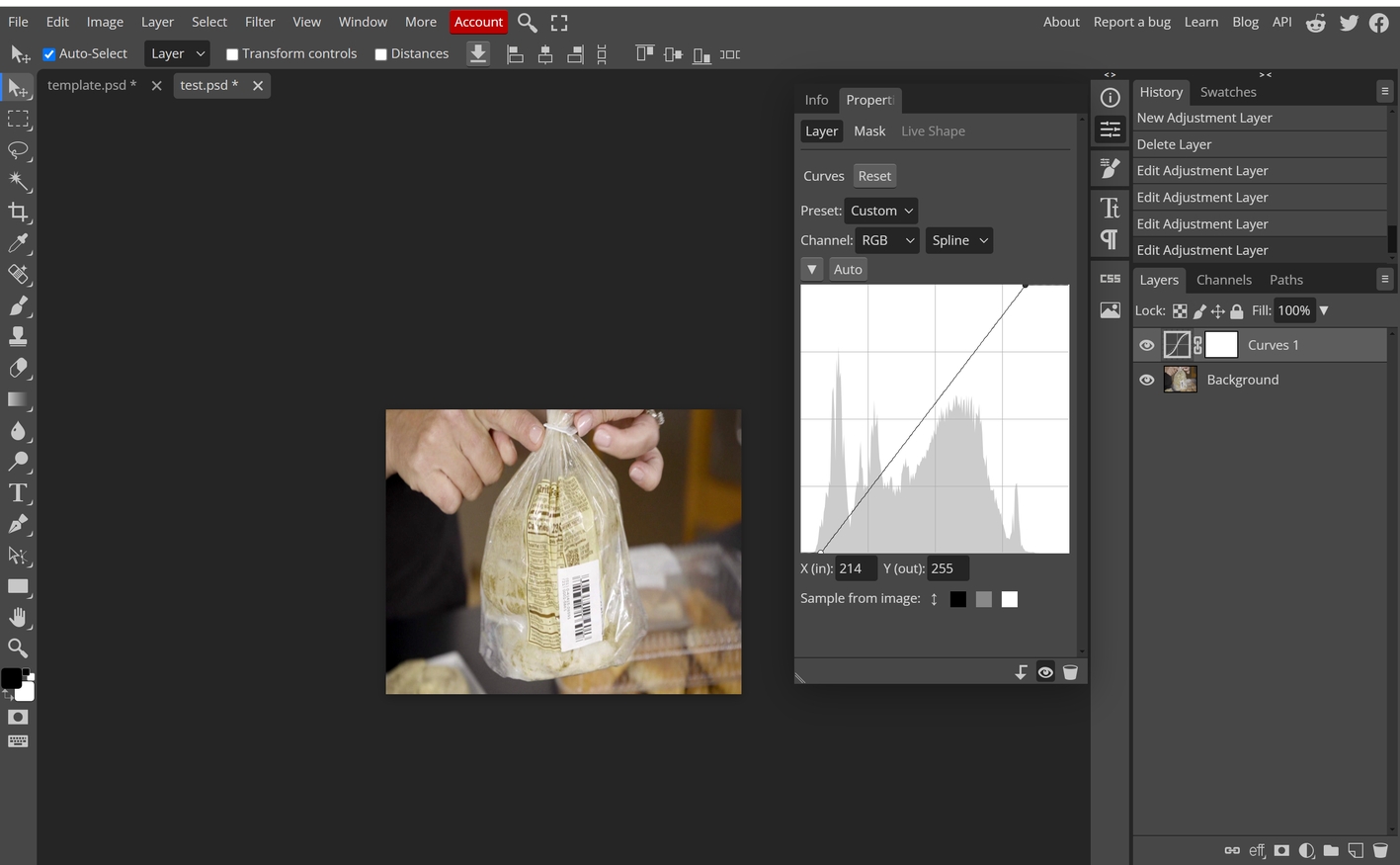

Do you guys know about https://www.photopea.com? Here is a basic contrast curve implementing what you get from Levels() with just input_low and input_high. You can add points and see the effect of different curves. It's like the VirtualDub levels filter UI on steroids.
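Steps 1 and 2 of the plan can be illustrated with a lookup table built from the luma formula documented for Avisynth Levels(): normalize between input_low and input_high, raise to 1/gamma, then scale to the output range. This is a Python sketch and not DGLevels() code. The function name and the `bits` parameter are assumptions, and coring, dithering and chroma handling are left out.

```python
def build_levels_lut(input_low, gamma, input_high, output_low, output_high, bits=8):
    """Levels-style lookup table for one plane.

    out = ((in - input_low) / (input_high - input_low)) ** (1 / gamma)
          * (output_high - output_low) + output_low
    All limits are given in the scale of the chosen bit depth.
    """
    if input_high <= input_low:
        raise ValueError("input_high must be greater than input_low")
    max_value = (1 << bits) - 1
    lut = []
    for value in range(max_value + 1):
        norm = (value - input_low) / (input_high - input_low)
        norm = min(max(norm, 0.0), 1.0)   # clamp before applying the curve
        curved = norm ** (1.0 / gamma)    # gamma is just one possible curve
        out = curved * (output_high - output_low) + output_low
        lut.append(int(min(max(out, 0), max_value) + 0.5))
    return lut


linear = build_levels_lut(32, 1.0, 200, 0, 255)
brighter_mids = build_levels_lut(32, 2.0, 200, 0, 255)
print(linear[116], brighter_mids[116])
```

Generalizing to curves amounts to swapping the `norm ** (1.0 / gamma)` line for any monotonic function on [0, 1], such as a spline through user-supplied points.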

CUDASynth

Got the DGLevels() CUDA standalone filter working. I added a new chroma parameter not in Avisynth Levels(). If chroma=1 levels is applied to the U and V planes as in Avisynth Levels(). If chroma=0 then the U and V planes are passed through unchanged. I had seen some posting about people wanting U and V passed through and having to use MergeChroma() etc. So I thought why not just offer the option.

I'll make the integrated version and then give y'all a test version. Then it's on to step 2 of the plan.

CUDASynth

Here is test9 with a new DGLevels() filter. Refer to the updated manual for details. Your feedback will be appreciated.

I think I might first do step 4 with what we have and then come back to steps 2 and 3.

http://rationalqm.us/misc/DGDecodeNV_test9.zip

CUDASynth

I wanted to know better what HDRAGC() is doing so I scrounged up some old source code and managed to build it in 64-bit and get it running under Avisynth+. Here are some examples (the images are from my VirtualDub Histogram and Windowed Histogram filters web pages). For each example, the before is first and after HDRAGC is second. I make some comments at the end.

hdragc(max_gain=20,min_gain=10,coef_gain=10,max_sat=1)

hdragc(max_gain=20,min_gain=10,coef_gain=10,max_sat=1)

While the filter is adaptive over the image, its goal and design is only to bring up dark areas without blowing out bright areas. So it cannot reproduce what my Windowed Histogram filter can produce:

It's a bit surprising to me that the author limited it to only brightening dark areas and not darkening light areas. The academic paper he cited as his inspiration is not so limited. To darken bright areas he recommends doing Invert() before and after HDRAGC(). Forgive my stifled guffaw. It's at least not as bad as loudly passing wind, I hope.

I also tried later versions of the filter and found that in my testing they perform worse. Possibly creeping featurism took its toll.

In summary, IMHO it's a laudable attempt at image area adaptation but it doesn't go far enough. Let's see if we can do it better.

- Bullwinkle

- Posts: 338

- Joined: Thu Sep 05, 2019 6:37 pm

CUDASynth

@Bullwinkle

Yes, I know that from sad personal experience. Anyway, congratulations!

Decided to proceed with step 2 before going to step 4. Anyway, automaticity needs a lot of thought.

Regarding step 2 I've stumbled on an awesome filter by ErazorTT, which pretty much does what we need:

http://avisynth.nl/index.php/GradationCurve

Here's the best part: "Public Domain".

So, let's start with that. I want to make these improvements:

* Implement in CUDASynth, of course. That means getting rid of the AVSI interface and making it a pure DLL filter.

* Allow definitions of frame ranges with associated point sets. Avoids the need for Trims and all that nonsense in the script.

* Provide a linear mode in addition to the spline mode.

* Optionally show the curve on the video and/or write it to a file. Fix uncontrolled accumulation of plot files.

* Provide chroma/no chroma adjustment option.

* Provide curve presets as in Vapoursynth-Curve. I have ideas for more useful preset curves than exist in Vapoursynth-Curve. Also, ability to create your own presets.

As always, your thoughts would be welcome. I'll release step 1 (basic levels filter) today.

CUDASynth

Hmm, looks like I'm going to have to create my own solution. ErazorTT's implementation is heavily dependent on the avsi/eval/expr interface. For example, the curves it outputs are in reverse Polish notation, and the adjustment is applied by expr. By the time I convert everything and implement the adjustment plus the extra features I have planned, it's essentially the same as writing my own code. I will be able to re-use the spline code and the Photoshop/Gimp import stuff, however.

CUDASynth

Released DGDecNV 254 with DGLevels() standalone and integrated into DGSource().

CUDASynth

hey Rocky i had a thought

(i know shocking)

couldn't you make a filter that does the whole cudasynth thing

with all the filters

but without DGSource()?

then the sheeple could basically have cudasynth

but with a different source filter

akshully, Britney mentioned this last night

Curly Howard

Director of EAC3TO Development

CUDASynth

I have completed a big part of step 2 of the plan (currently in C code). Here is the script:

loadplugin("...\DGDecodeNV.dll")

loadplugin("...\Curves.dll")

DGSource("qsf.dgi")

Curves(pfile="points.txt",chroma=1)

And here is the points.txt file:

P 0,0

P 32,0

P 200,255

P 255,255

You can see that it is equivalent to Levels() with input_low/input_high = 32/200. Any number of points can be defined.
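To show why those four points behave like Levels() with 32/200, here is a Python sketch that parses the `P x,y` lines and builds an 8-bit table by linear interpolation between neighboring points. This is not the Curves.dll code (which is in C). The function names are hypothetical, and holding the end values flat outside the first and last points is an assumption.

```python
def parse_points(text):
    """Read 'P x,y' lines into a list of (x, y) tuples sorted by x."""
    points = []
    for line in text.splitlines():
        fields = line.split()
        if len(fields) == 2 and fields[0] == "P" and "," in fields[1]:
            x, y = fields[1].split(",")
            points.append((int(x), int(y)))
    return sorted(points)


def piecewise_linear_lut(points, max_value=255):
    """Interpolate linearly between control points to get one output per input."""
    lut = []
    for value in range(max_value + 1):
        if value <= points[0][0]:
            lut.append(points[0][1])      # at or below the first point
            continue
        if value >= points[-1][0]:
            lut.append(points[-1][1])     # at or above the last point
            continue
        for (x0, y0), (x1, y1) in zip(points, points[1:]):
            if x0 <= value <= x1:
                t = (value - x0) / (x1 - x0)
                lut.append(int(y0 + t * (y1 - y0) + 0.5))
                break
    return lut


points_txt = """P 0,0
P 32,0
P 200,255
P 255,255"""
lut = piecewise_linear_lut(parse_points(points_txt))
print(lut[16], lut[32], lut[116], lut[200], lut[240])
```

Everything at or below 32 maps to 0, everything at or above 200 maps to 255, and the segment in between is the same straight line a linear levels stretch would give.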

Coming enhancements:

* Frame ranges with each range having its own points set:

F 0,99

P 0,0

P 32,0

P 200,255

P 255,255

F 100,199

...

F 200,EOF

...

* HBD.

* Splines.

* Photoshop/Gimp curve import.

* Show curve on the video or save to BMP file.

* Presets.

Any thoughts?
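One way the proposed frame-range layout could be read is sketched below in Python. The `F`/`P` line format is taken from the example above, but the parser, the function names, and the sample point sets for the second and third ranges are all made up for illustration (the post elides them). Treating `EOF` as an open-ended range is an assumption.

```python
def parse_ranges(text):
    """Parse 'F start,end' headers, each followed by its 'P x,y' lines."""
    ranges = []
    for line in text.splitlines():
        fields = line.split()
        if len(fields) != 2 or "," not in fields[1]:
            continue  # skip blank or unrecognized lines
        tag = fields[0]
        first, second = fields[1].split(",")
        if tag == "F":
            end = None if second == "EOF" else int(second)  # None = open-ended
            ranges.append({"start": int(first), "end": end, "points": []})
        elif tag == "P" and ranges:
            ranges[-1]["points"].append((int(first), int(second)))
    return ranges


def points_for_frame(ranges, frame):
    """Return the point set of the first range containing the frame, else None."""
    for entry in ranges:
        if frame >= entry["start"] and (entry["end"] is None or frame <= entry["end"]):
            return entry["points"]
    return None


# Hypothetical file contents; only the first range comes from the post.
sample = """F 0,99
P 0,0
P 32,0
P 200,255
P 255,255
F 100,199
P 0,0
P 255,255
F 200,EOF
P 0,16
P 255,235"""
ranges = parse_ranges(sample)
print(points_for_frame(ranges, 150))
```

A filter would presumably build one lookup table per range up front and select the table by frame number at request time, so the per-frame cost stays the same as with a single point set.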

CUDASynth

This brilliant idea also has the advantage that all the standalone versions could be eliminated, thereby greatly reducing the workload for development, testing, and maintenance.

CUDASynth

Cool !

Thank you for the new stuff, looking forward to trying it.

CUDASynth

Curly wrote: ↑Sat Mar 30, 2024 4:22 am

hey Rocky i had a thought

(i know shocking)

couldn't you make a filter that does the whole cudasynth thing

with all the filters

but without DGSource()?

then the sheeple could basically have cudasynth

but with a different source filter

akshully, Britney mentioned this last night

Just wondering, would that mean DGsource loading something into gpu, then it gets downloaded to CPU whilst this new "big filter, cudasynth" (BFC?) gets involved and loads it back to the gpu to process ? I wouldn't have thought so, but seek your advice.

Cheers.

CUDASynth

That looks really nice.

Wondering, the original video author may have intended for some type of shade graduation left to right to remain apparent, perhaps a squirrely monster lurks in the shadows or something, and my tired old eyes don't quite perceive the result like that or I didn't properly discern your intent.

I guess you are saying that's doable and depends on some parameters (curves?) one specifies in the call. I must read the documentation you provided in the release.

Would it be possible to post the clip with the stones, for us to play with ?

CUDASynth

Not quite. DGSource() would not be used. You'd start with some other source filter, then add the proposed DGFilters() filter:

DSS()

DGFilters(tw_enable, tw_sat=1.5,...)

The ... could invoke any of the implemented CUDASynth filters. The only difference here is that instead of DGSource() just leaving the decoded frame on the GPU for further filtering, the frame would take a trip back to the CPU and then be uploaded by DGFilters() to the GPU for further filtering, all of which would take place on the GPU without coming back to the CPU until the very end. There are two advantages: 1) different source filters could be used (while retaining the advantages of CUDASynth), and 2) I wouldn't have to individually implement standalone filters; I'd just have the one do-it-all DGFilters().

The delta between DGSource() and DGFilters() would be small and once the framework is in place, it would be easy to add new filters to both, which is currently a bunch of double work.